When a monarch quietly hands the world’s most powerful AI chipmaker a letter about artificial intelligence risks, it is more than a polite royal gesture. It is a political signal.

At the 2025 Queen Elizabeth Prize for Engineering ceremony at St James’s Palace, King Charles III presented Nvidia CEO Jensen Huang with an award for pioneering the GPUs that power today’s AI boom. Then he did something unusual: he told Huang, “I need to talk to you,” and personally handed him a letter on AI safety (The Independent).

That moment, now circulating in tech and business media, sits at the intersection of royalty, geopolitics, and AI regulation. Let’s unpack what happened, what was in the King Charles AI letter, and what it tells us about how political leaders are trying to shape the AI safety narrative.

What actually happened at the Queen Elizabeth Prize ceremony?

Event:

- Location: St James’s Palace, London

- Occasion: 2025 Queen Elizabeth Prize for Engineering, often called the “Nobel prize for engineering” (The Independent).

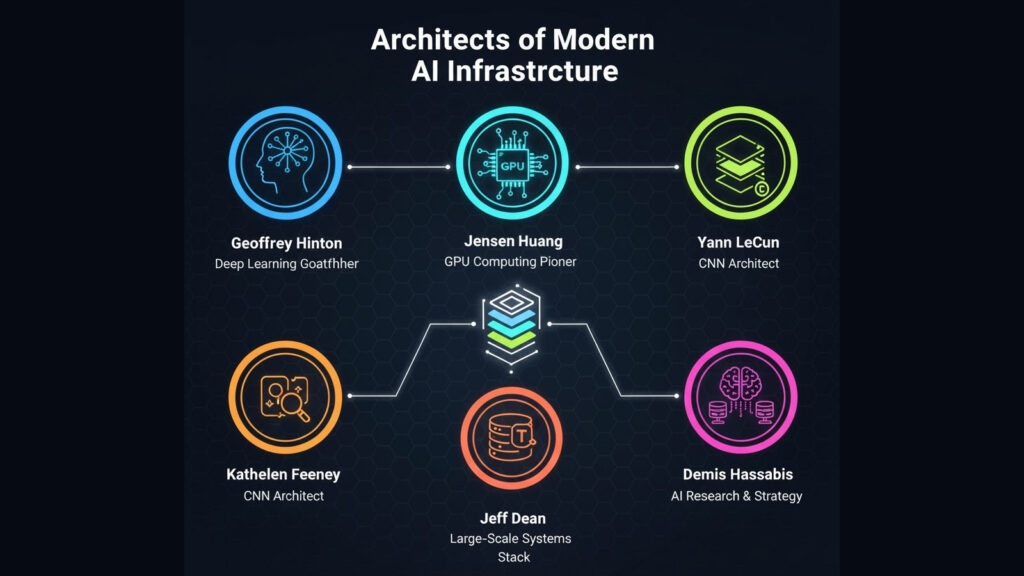

- Key figures: King Charles III, Nvidia CEO Jensen Huang, Nvidia Chief Scientist Bill Dally, and other AI pioneers including Geoffrey Hinton, Yoshua Bengio, Yann LeCun, Fei-Fei Li, and John Hopfield (The Independent).

Why Huang and Dally were honoured:

They were recognized for their contributions to accelerated computing and GPU architectures that underlie modern machine learning and generative AI systems (NVIDIA Blog).

The letter moment:

- Huang recounted that the King told him, “There’s something I want to talk to you about,” then handed him a letter.

- Inside was a copy of the King’s speech on AI safety, originally delivered at the 2023 UK AI Safety Summit at Bletchley Park (Technology Magazine; The Independent).

- Huang later told reporters and the BBC that the King “obviously cares very deeply about AI safety” and wants to ensure AI is used for good, not harm (Technology Magazine).

Symbolically, this is powerful. The King Charles AI letter was handed to the CEO of the company whose GPUs are the backbone of global AI infrastructure, at a ceremony designed to celebrate the very technology that is causing concern.

What was in King Charles’ AI letter?

We do not have a new, secret policy document here. Multiple reports say the letter contained:

A copy of King Charles’s 2023 speech on AI safety, delivered at the UK AI Safety Summit at Bletchley Park (Technology Magazine; Perplexity AI).

In that 2023 address, as reported by Technology Magazine, Charles called for:

- Urgency in addressing AI risks

- Unity and collective strength among nations

- AI that benefits humanity while guarding against misuse and loss of control

His main themes, echoed again by handing this letter to Huang, include:

- AI as a dual-use technology

  - Capable of enormous economic and scientific benefits.

  - Also capable of enabling disinformation, cyber attacks, and destabilising systems if misused.

- Existential and systemic risk awareness

  - Charles has previously referenced long-term dangers from unaligned or uncontrolled AI, aligning with broader debates on existential risk (Wikipedia).

- Shared responsibility between governments and industry

  - He stresses that tech leaders and policymakers must collaborate, not treat AI safety as someone else’s problem.

So in effect, the content of the letter is not new; the recipient is. The message is being personally delivered to the CEO of the world’s most strategically important AI hardware supplier.

Why Jensen Huang and Nvidia matter in AI governance

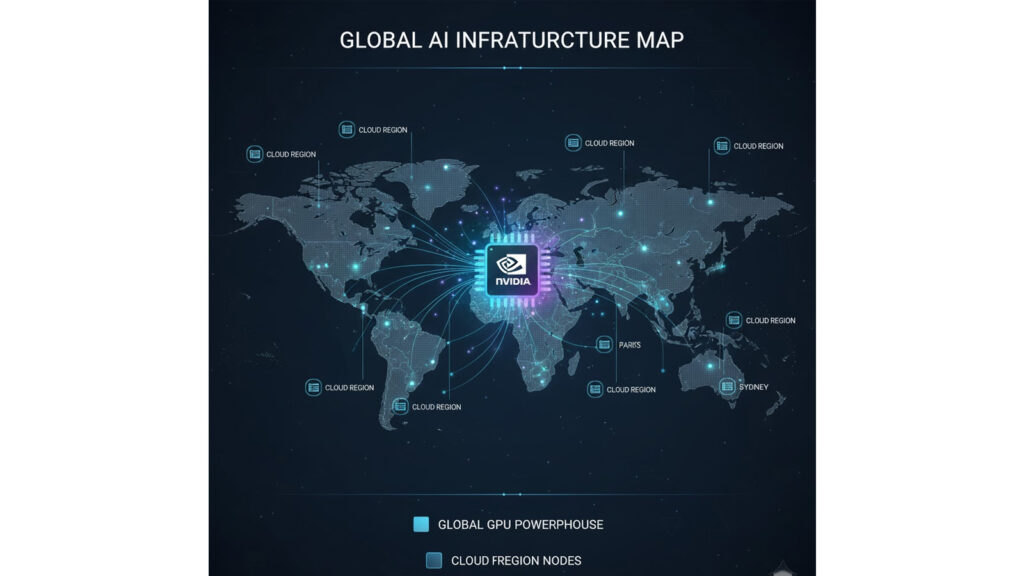

If you care about AI governance in the UK or globally, Nvidia is not just another tech company.

Nvidia’s leverage in AI

- Nvidia GPUs power most leading AI models and clouds. From OpenAI and Anthropic to Google, Meta, and countless startups, much of the industry depends on Nvidia hardware (NVIDIA Blog).

- Microsoft, for example, is building data centres specifically to host “hundreds of thousands” of Nvidia GPUs to scale frontier AI (AInvest).

That means Nvidia sits upstream of almost all AI capability development. Decisions about who gets high-end GPUs, under what conditions, and with what safeguards, can shape the pace and geography of AI progress.

Why a royal letter matters to a chip company

By handing this letter directly to Jensen Huang, King Charles is doing three things at once:

- Recognising Nvidia’s centrality

The award publicly frames Nvidia as a global engineering hero for enabling AI, not just a vendor of gaming chips.

- Signalling responsibility at the infrastructure layer

The letter says, in effect: If your GPUs power the world’s AI, then you share responsibility for its safety.

- Bridging soft power and hard infrastructure

This is soft power from the British monarchy directed at a company that controls a crucial piece of global digital infrastructure.

For AI policy watchers, this is a clear sign that regulators and leaders do not see AI safety as only a “model lab” issue. It is an infrastructure issue, starting with Nvidia GPUs.

How political leaders are shaping the AI safety narrative

King Charles is not a legislator, but the monarchy has agenda-setting influence, especially in the UK. His letter fits into a broader pattern of how political leaders are trying to frame AI risk and AI safety policy.

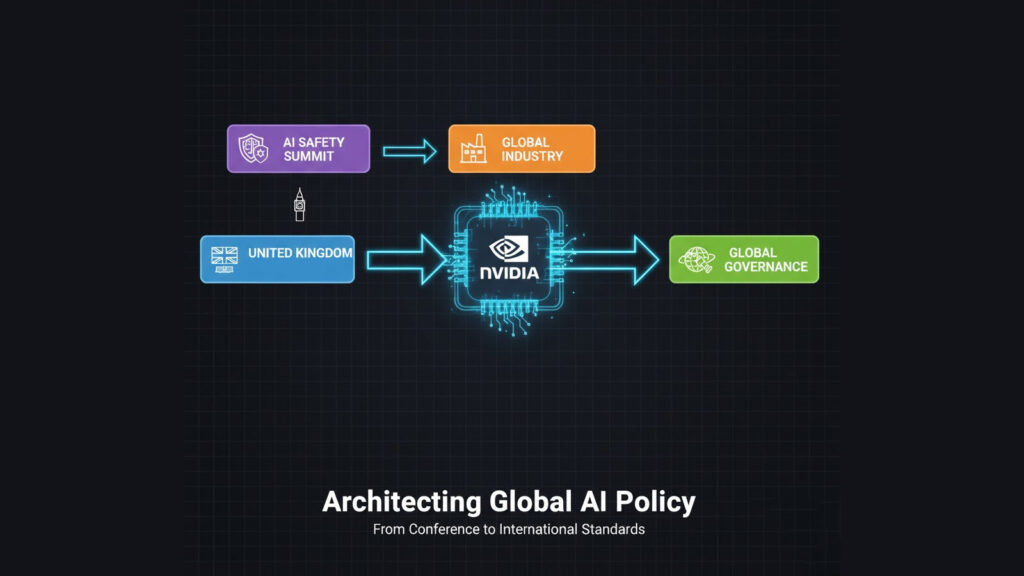

1. The UK’s “AI safety champion” strategy

The UK has been actively branding itself as a global hub for AI governance and safety, not just AI startups:

- It hosted the 2023 AI Safety Summit at Bletchley Park, bringing together the US, the EU, China, and major AI labs to discuss frontier model risks (Wikipedia).

- The government has set up a dedicated AI Safety Institute and is experimenting with pre-deployment testing of advanced models (Wikipedia).

King Charles repeatedly speaking on AI and re-circulating his safety speech through this letter helps keep that narrative alive: the UK is the place where AI safety and ethics are integral to the AI story.

2. Balancing innovation and risk in public messaging

The ceremony itself captures a classic political balancing act:

- On one hand, the Queen Elizabeth Prize for Engineering celebrates AI as an innovation engine, honouring Huang, Dally, Li, Hinton, Bengio, LeCun, and Hopfield (The Independent).

- On the other, the King uses the same platform to warn of “a lot of bad actors around” and the rapid pace of new technologies (The Independent).

This is becoming the standard template for AI governance speeches:

“AI is transformative and vital for growth, but we must act responsibly and manage risks.”

The letter simply compresses that template into a physical symbol and delivers it to the most influential actor in AI hardware.

3. Reframing AI as a shared moral responsibility

By choosing to hand over his own speech rather than a government white paper, King Charles is attaching a moral dimension to AI risk:

- It feels less like regulation and more like ethical stewardship.

- It positions AI risk as something that leaders of companies should feel personally responsible for, not just legally bound by.

This dovetails with broader AI safety narratives that emphasise corporate responsibility, internal governance, and culture, not just compliance checklists.

What does this signal for future AI governance in the UK?

The King’s letter on AI safety is symbolic, but symbols often foreshadow policy.

Here is what it likely signals for AI governance in the UK and beyond:

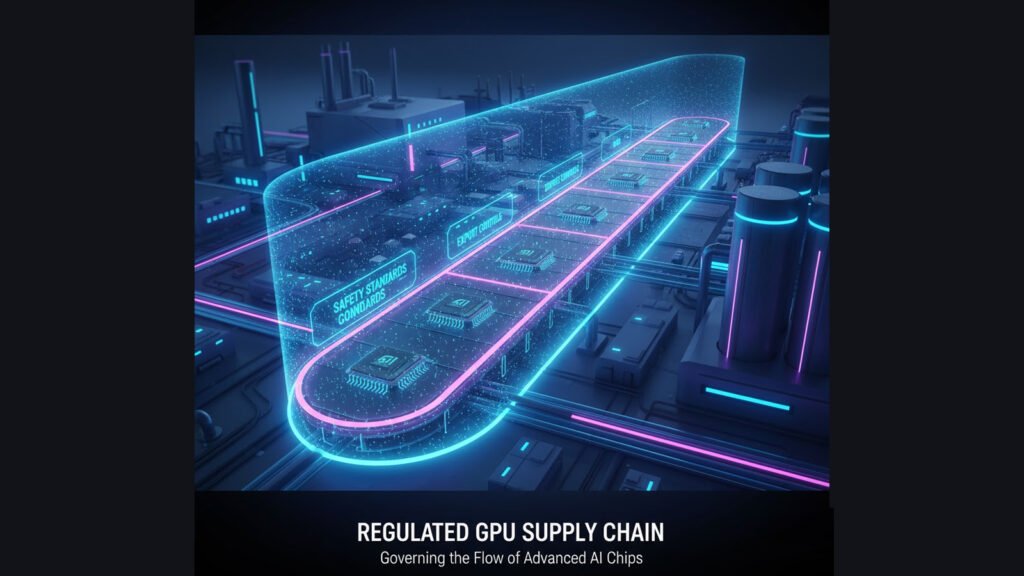

1. More attention on upstream controls (like Nvidia GPUs)

Expect growing debate about whether access to advanced GPUs should be:

- Tracked or licensed above certain performance thresholds

- Tied to safety commitments, audits, or risk reporting

- Subject to export controls in sensitive or high-risk contexts

The US has already used export controls to restrict some Nvidia chips from being sold to certain countries (Wikipedia). The UK and its partners could push further, framing advanced compute as a regulated critical resource for frontier AI.

2. Continued UK positioning as a convenor of AI safety

The King’s gesture keeps the UK’s Bletchley Park moment alive in public memory. The likely direction:

- More multilateral forums on AI risk hosted in the UK

- Deeper collaboration between UK regulators and leading firms like Nvidia, OpenAI, DeepMind, Anthropic, etc.

- Efforts to align AI safety policy across G7 and other blocs, with the UK acting as a bridge (Wikipedia).

3. Stronger expectations on corporate AI governance

By personally nudging Huang, the monarchy is backing a norm that tech CEOs should:

- Publicly discuss AI risks, not just opportunities

- Invest in internal AI safety teams, red-teaming, and alignment research

- Engage directly with policymakers and civil society on risks like misuse, disinformation, and systemic failures

In practice, this aligns with growing calls for companies to publish AI safety policies, adopt model cards, participate in standard-setting bodies, and cooperate with regulators.

So, is this “King Charles vs. AI”?

Not exactly. It is more “King Charles vs. unmanaged AI risk.”

From Huang’s own comments, as reported by Technology Magazine, there is no adversarial tone. He framed the King as someone who:

- Believes strongly in AI’s potential to “revolutionise the UK and the world”

- Wants to ensure the technology is advanced responsibly

- Cares enough to personally remind the world’s top AI hardware CEO about the stakes

The subtext for industry is clear, though:

If you build the tools that power AI everywhere, you own a share of the risk. And leaders are watching.

What this means for anyone following AI safety policy

If you are tracking AI safety policy or AI governance in the UK, here is why the King Charles AI letter matters:

- It anchors AI risk in the mainstream.

This is not a niche debate among researchers. It is a subject for heads of state, monarchs, and CEOs.

- It highlights infrastructure-level accountability.

Nvidia GPUs and other compute infrastructure are becoming key levers in AI governance, not just an engineering detail.

- It reinforces the UK’s safety-first branding.

The monarchy is now part of the story the UK tells the world: Britain is where AI’s benefits are celebrated and its risks confronted.

- It previews future regulatory directions.

Expect more discussion about compute controls, mandatory safety testing, and more structured engagement between governments and AI suppliers.

Conclusion: A quiet but loud moment in AI politics

The sight of King Charles smiling beside Jensen Huang while handing him one of the world’s top engineering honours says one thing: AI is the pride of modern engineering.

The quiet handover of a personal letter about AI dangers says another: AI is also a strategic risk that demands serious governance.

Taken together, the ceremony is a microcosm of where we are with AI in 2025:

- Enthralled by its promise

- Increasingly anxious about its power

- And beginning to understand that those who control the chips, data centres, and models sit at the heart of global AI politics

The King’s letter to Nvidia’s CEO may not create new law. But it sends a message that will echo in boardrooms and policy circles:

The age of AI is here, and so is the expectation that those who build it will help govern it.